🎉 Announcing the new Puppeteer debugging site!

Without a doubt, one of the most helpful tools when working with any browser automation library is a visual debugger. We've written quite a bit about it. Since browserless is built specifically for developers, we're always striving to provide the best experience possible with debugging — and weren't satisfied with the state-of-the-art with the newer automation libraries. Simply put: headless: false isn't quite enough to debug Puppeteer, nor is any current live "REPL" out there.

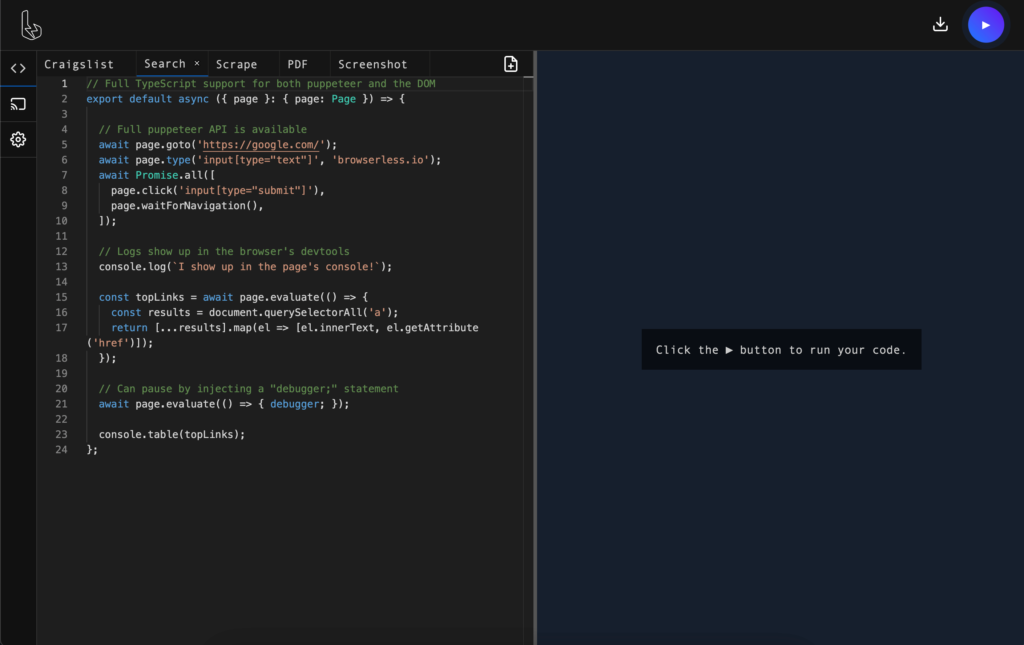

Today, we're happy to announce that our complete re-write of the debugger is now live 🎉! It includes all the features we wanted in a debugger, and more.

The new REPL web-app

We've been using this debugger internally for quite some time, and have found it incredibly useful. A short list of features include:

- An editor with syntax highlighting and auto-complete.

- Typescript support.

- A visual screencast of the browser that you can interact with.

- The ability to interact with the DevTools of the browser.

- Downloading of files when needed, useful for testing PDFs/PNGs.

- Ability to control browser-specific parameters like headless and stealth.

- Tab-based layout with the ability to save your code.

Quite a bit of planning, research and development went into building this editor out. Since we found it to be such a useful tool, we also thought it'd be interesting to go over some of its core fundamentals and how we went about building it. Let me pause and say this before we get to far: if you do one thing today, just try out the debugger. If you do two things, read below on how we built it!

Building the editor

Without a doubt the most "useful" aspect of any debugger or REPL is the editing experience. Since we're big fans of VS-code, we decided to use the fantastic monaco-editor library that powers it. Getting monaco up and running with your favorite libraries can be somewhat of a hassle, so we scoped back the amount of packages that are typed to just two for now: node and puppeteer. Given that both of these packages have typings already, it's simply a matter of loading them into the editor. Doing so is fairly straightforward once you have your editor object setup, so that's our first step!

Editor Setup

Once setup, monaco has an API for injecting typings into it as well as the configuration for the TypeScript compiler. Setting up a new TS project is relatively straightforward:

Now that everything is setup it's simply a matter of loading our types into the editor. This part turned out to be a bit tricky, since monaco wants types as a plain-string property. Thankfully, we're using WebPack to bundle up the project, so "loading" these types is as simple as requiring them as raw files:

For the purposes of our editor, we actually do some manipulation of the types so that they're globally exported (and don't need to be required). The full source can be found here, for those who want to see the whole project.

Building out the live viewer

Without a doubt, the second most helpful thing is actually seeing what the browser is up to — especially in the environment it's running in. We've seen cases where things worked just fine on local machines, but had issues in the cloud, so having the browser actually execute in the cloud was important to us for full transparency. To do this we reached into Chrome's DevTools Protocol to screencast frames of video directly back to your browser. This means that your browser is actually running the puppeteer code and viewing the session, meaning there's no other network hops being made.

To do this, we instantiate puppeteer's browser object inside of a WebWorker:

Now that we've got our browser and page boilerplate setup, it's just a matter of wiring up the screencast even so that we can draw it on the we-browser locally. Doing so isn't that complicated, however requires a bit of setup. For this particular case, we'll actually be drawing onto a <canvas> element inside the browser.

Once both are all setup, you can do the following in the browser (assuming you have a <canvas id="screencast"> in your page):

This really is just the start: we don't talk much about how keyboard and mouse events are handled, but if you want to see the full code feel free to do so here.

Handling downloads

The last bit of work is handling downloads: things that your code might run that produces some kind of static asset like a PDF or PNG file. To do that, we had to get a one more library in the project to help us out: file-type. This module, at its core, accepts a binary blob of some kind and tells us what it is. For us this mostly helps the editor to discern from PDF and PNG images, but can potentially be expanded to other formats as well.

Once the page's code has ran, we look at what the script returns and make an educated guess as to what's supposed to happen. Since this is all being ran inside a WebWorker, we can eval the code with some sand-boxing. Remember: this is all ran inside the user's browser inside a WebWorker so while it's generally a bad idea to eval most of the time, we made an exception here.

For handling of downloads, we have a pretty simple function that checks what it is:

Finally, once we have the type of file that's being returned, we can trigger the browser to download it:

Much more in the repo!

While this is just a highlight of some of the technologies we used to build the live debugger, there's a lot more that went into the project. We didn't touch upon our tab implementation, how we load puppeteer into the browser, or even how we save your code! You should definitely check out the repo and see the whole project in its glory. Finally, you should definitely check out our debugger, and make use of it when something isn't working properly in your headless workloads.