Real estate is one of the biggest global markets, with the size of professionally managed assets rising to USD 11.4 trillion in 2021. If you're in this market and want to analyze a lot of market data, you'll likely open Zillow, the most-visited real estate website in the United States.

Nowadays, free platforms like Zillow are the go-to choice for accessing and comparing thousands of properties. To gather and evaluate that information at scale, you first need to extract it, and web scraping is the usual way to do so.

However, accessing data from a website like Zillow in a dependable way is quite tricky. There are paid options on the market, but we will try a more straightforward, low-cost approach. In this article, we will use a popular JavaScript library called Puppeteer and a free online automation platform called Browserless to extract information from search results on Zillow.

Zillow scraper step #1 - Get a free account on Browserless

Before implementing the scraping script, we need access to a remote browser instance. Browserless is a headless automation platform ideal for web scraping and data extraction tasks. It’s an Open Source platform with more than 7.2K stars on GitHub. Some of the world’s largest companies use the platform daily for QA testing and data collection tasks.

To get started, we first have to create an account.

The platform offers a free plan, as well as paid plans if we need more processing power. The free tier offers up to 6 hours of usage, which is more than enough for our case.

After completing the registration process, the platform supplies us with an API key. We will use this key to access the Browserless services later on.

Zillow scraper step #2 - Set up a Node script with Puppeteer

Now that we have access to Browserless, we can initialize our project. Browserless has excellent support for several programming languages and platforms, like Python, C#, and Java, but we prefer JavaScript with Node.js due to its robust environment and simple setup.

First, let's initialize a new Node project and install the puppeteer package.

$ npm init -y && npm i puppeteer-core

Puppeteer is a popular JavaScript library for browser automation tasks, like web scraping. It counts more than 79K stars on GitHub, is actively maintained, and comes bundled with Chromium by default. Because we use a remote browser instance, however, we install the puppeteer-core package, which provides all the functionality of the main puppeteer package without downloading the browser, resulting in fewer dependency artifacts. It is a great way to reduce disk space and memory consumption while leveraging the production environment that Browserless provides.

Now that we have installed the appropriate dependency, we are ready to create the script's structure.

The base structure of the script is relatively straightforward:

- First, we import the puppeteer-core package.

- We declare a variable BROWSERLESS_API_KEY, whose value is the Browserless API key we retrieved from the dashboard earlier.

- We declare an asynchronous function getZillowProperties that accepts two parameters: address, which indicates the desired search area, and type, which indicates the listing type: either sale, rent, or null (which will return results for all listing types).

- We call getZillowProperties to get the results for properties that are located in California, CA, and are listed for sale. The call uses top-level await, so this code only works on Node 14.8 or later, and only inside an ES module.

- Finally, we print the results to the terminal.

Inside getZillowProperties, we first connect to the remote browser instance through the Browserless service by calling the connect method of the puppeteer module and passing a connection string as the browserWSEndpoint property. The connection string consists of the following parts:

- The connection base URI wss://chrome.browserless.io

- The token query-string parameter, whose value is stored in BROWSERLESS_API_KEY.

- The stealth option to help ensure our requests run stealthily. It is needed for bypassing simple bot detector scripts when accessing the Zillow website.

Then we instantiate a new browser page, set the viewport to a desktop variant, and navigate to the Zillow website.
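The connection and navigation steps described above could be sketched as follows. This is a minimal sketch rather than the exact script: the placeholder key, the buildEndpoint helper, the 1920x1080 viewport, and the waitUntil setting are assumptions made for illustration, and the stealth flag is appended as a bare query parameter, which is how we assume Browserless expects it.

```javascript
// Placeholder: replace with the API key from the Browserless dashboard.
const BROWSERLESS_API_KEY = 'YOUR_API_KEY';

// Hypothetical helper that assembles the three parts of the connection string.
const buildEndpoint = (apiKey) =>
  `wss://chrome.browserless.io?token=${encodeURIComponent(apiKey)}&stealth`;

const getZillowProperties = async (address, type = null) => {
  // Loaded lazily here; a static `import puppeteer from 'puppeteer-core'`
  // at the top of an ES module works just as well.
  const { default: puppeteer } = await import('puppeteer-core');

  const browser = await puppeteer.connect({
    browserWSEndpoint: buildEndpoint(BROWSERLESS_API_KEY),
  });

  try {
    const page = await browser.newPage();
    // Assumed desktop-sized viewport.
    await page.setViewport({ width: 1920, height: 1080 });
    await page.goto('https://www.zillow.com/', { waitUntil: 'domcontentloaded' });

    // Steps #3 to #5 (search, CAPTCHA handling, extraction) would use
    // `address` and `type` here. This sketch returns no results yet.
    return [];
  } finally {
    // Always release the remote browser, even if a step throws.
    await browser.close();
  }
};

// In an ES module on Node 14.8 or later, call it with top-level await:
//   console.log(await getZillowProperties('California, CA', 'sale'));
```

Closing the browser in a finally block is a design choice of this sketch: the free tier is metered by usage time, so a session that is left open after an error would keep consuming it.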

Zillow scraper step #3 - Initialize a search

We have successfully established a new connection to the Zillow page.

The next step is to initialize our search: we locate the search input element in the DOM, type the desired address, and click the search button. We can inspect the DOM locally using our browser’s Inspector tool.

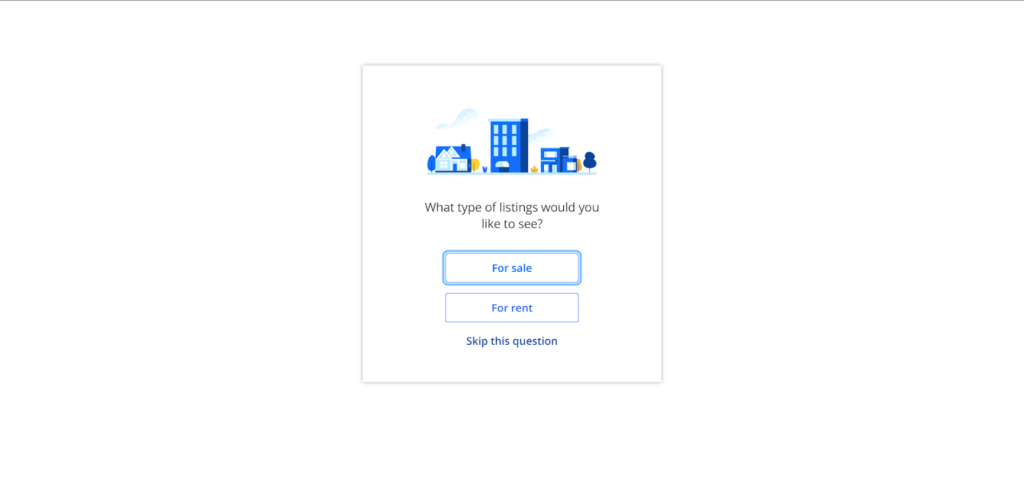
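A hedged sketch of this search step is shown below. The two selectors are hypothetical placeholders, not Zillow's real markup, so confirm them in the inspector before use. The pause is implemented with a small setTimeout-based sleep helper because page.waitForTimeout is no longer available in recent Puppeteer releases; on older versions, page.waitForTimeout does the same job.

```javascript
// Hypothetical selectors: verify both in the browser's inspector.
const SEARCH_INPUT = 'input[type="text"]';
const SEARCH_BUTTON = 'button[type="submit"]';

// Stand-in for page.waitForTimeout.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Types the address into the search box, submits it, then pauses so the
// page has time to react before the next step runs.
const searchAddress = async (page, address, pauseMs = 3000) => {
  await page.waitForSelector(SEARCH_INPUT);
  await page.type(SEARCH_INPUT, address, { delay: 50 }); // human-like typing speed
  await page.click(SEARCH_BUTTON);
  await sleep(pauseMs);
};
```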

The waitForTimeout call is crucial in this stage. After pressing the search button, Zillow might prompt us to select the resulting properties' listing type.

To handle this prompt, we will access the form element and press the desired button based on the value of the type parameter we defined in the getZillowProperties function.

Again, we call waitForTimeout to allow the page handler some time to process the keystrokes and load the next page.

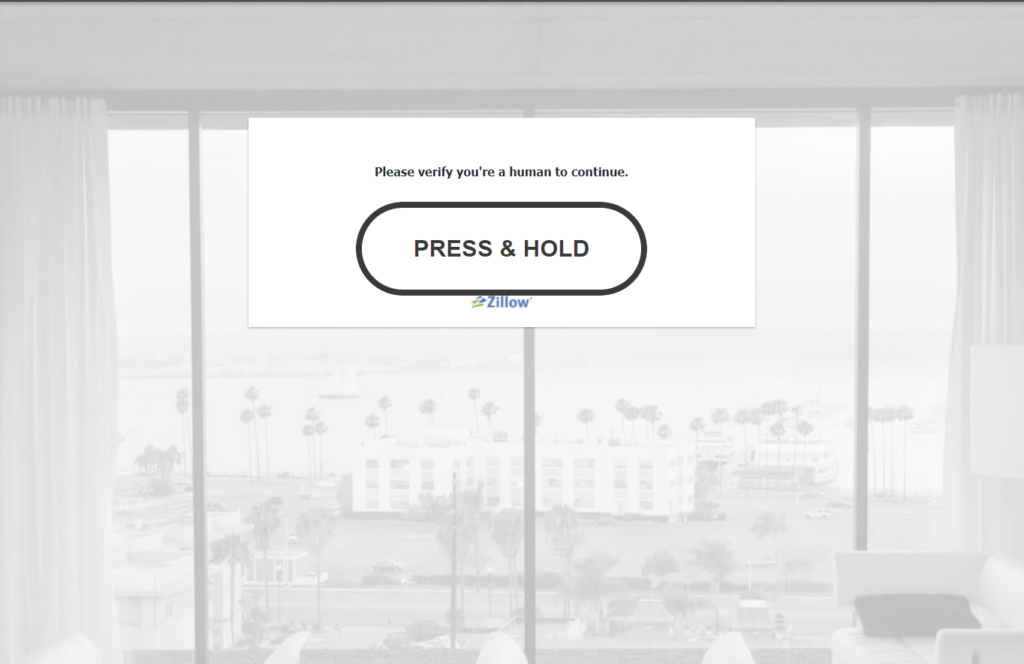
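The listing-type prompt could be handled along these lines. Every selector in this sketch is a made-up placeholder that must be replaced with the real one from the inspector, and the chooseListingType name is hypothetical as well. The function does nothing when the prompt is absent, since Zillow only shows it some of the time.

```javascript
// Hypothetical selectors for the buttons inside the prompt's form.
const LISTING_TYPE_SELECTORS = new Map([
  ['sale', 'form button.for-sale'],
  ['rent', 'form button.for-rent'],
]);
// Hypothetical selector for the button that skips the choice (type === null).
const SKIP_SELECTOR = 'form button.skip';

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Clicks the button that matches `type`; resolves to true if a button was found.
const chooseListingType = async (page, type = null, pauseMs = 3000) => {
  const selector = LISTING_TYPE_SELECTORS.get(type) ?? SKIP_SELECTOR;
  const button = await page.$(selector); // null when the prompt is not shown
  if (button) {
    await button.click();
  }
  // Give the page time to process the click and load the next view.
  await sleep(pauseMs);
  return Boolean(button);
};
```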

Zillow scraper step #4 - Bypassing CAPTCHA requirements

Zillow uses CAPTCHA as a bot prevention technique to reduce automated access to its services. CAPTCHA (Completely Automated Public Turing test to tell Computers and Humans Apart) is a security measure known as challenge-response authentication. This authentication type is a family of protocols in which one party (usually the server) issues a challenge, and another party (usually the client) must provide a valid answer. Several kinds of CAPTCHA verification challenges exist, from simple checkboxes to complicated puzzle-matching processes. In Zillow, it functions by presenting a button to the user, who then has to press and hold it for a certain amount of time, typically around 8 seconds. After successfully completing the challenge, together with other hidden validations, the user is redirected to the search result page.

In general, bypassing CAPTCHA verification is always a pain. The security level and the sophistication behind some of those services (like reCAPTCHA by Google) make many bypassing techniques obsolete. Luckily for us, however, the challenge presented by Zillow is easy to handle.

To come up with a valid bypass strategy, we need to understand some aspects of the verification challenge:

- A box containing the challenge is always rendered in a constant location on the screen.

- The button is always positioned in the same location.

- The time that it takes to solve the challenge is always the same.

- No further actions are required after solving the challenge.

- The challenge is always the same.

The above observations reduce the complexity of the solution to a handful of actions. Because we know that the button is always positioned in a specific location on the page, we can instruct the underlying Puppeteer engine to move the mouse to that particular point. We can then derive the solution algorithm easily:

- Get the element position that holds the CAPTCHA challenge.

- Add an offset to that position to move the pointer on top of the button.

- Press the mouse button (a mousedown event).

- Wait for 10 seconds, slightly longer than the roughly 8 seconds the challenge requires.

- Release the mouse button (a mouseup event).

- Done!

Below is the function that implements the above steps:

Because the CAPTCHA challenge might not always be present, we wrap the functionality in a try/catch block. When the app omits the CAPTCHA challenge, the target element is missing, so calling page.$eval throws an error. We can safely ignore that error because, in this case, the app redirects the user straight to the results page.
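The press-and-hold steps listed above can be sketched as follows. The container selector and the pointer offset are assumptions chosen for illustration and need to be checked against the live page, and bypassCaptcha is our own name for the helper. The hold duration is a parameter so that it can be tuned.

```javascript
// Assumption: id of the element that wraps the press-and-hold challenge.
const CAPTCHA_SELECTOR = '#px-captcha';
// Assumption: distance from the container's top-left corner to the button.
const BUTTON_OFFSET = { x: 50, y: 50 };

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Resolves to true if a challenge was found and the button was held,
// false if no challenge was present (or any step failed).
const bypassCaptcha = async (page, holdMs = 10000) => {
  try {
    // page.$eval throws when no element matches the selector.
    const rect = await page.$eval(CAPTCHA_SELECTOR, (el) => {
      const { x, y } = el.getBoundingClientRect();
      return { x, y };
    });

    // Move the pointer on top of the button, then press, hold, and release.
    await page.mouse.move(rect.x + BUTTON_OFFSET.x, rect.y + BUTTON_OFFSET.y);
    await page.mouse.down();
    await sleep(holdMs);
    await page.mouse.up();
    return true;
  } catch {
    // Errors are swallowed on purpose: a missing challenge is not a failure.
    return false;
  }
};
```

Note that the catch block hides every error, not only the missing-element one. That keeps the sketch short, but logging the error while debugging makes a wrong selector much easier to spot.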

Zillow scraper step #5 - Retrieve search results

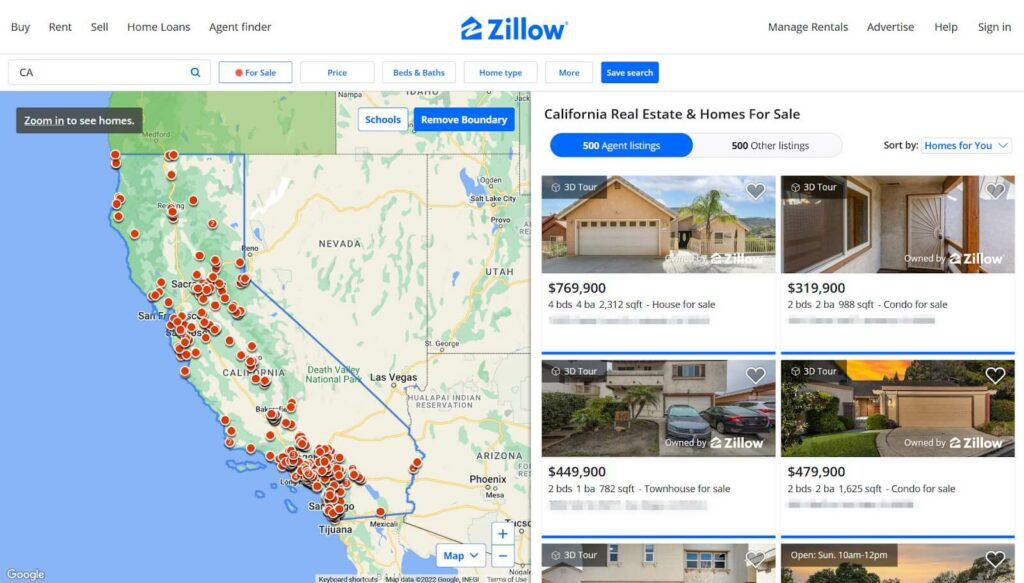

We are finally on the results page. As you can see from the screenshot, the app renders each house into a card placeholder element. The next step is to read each generated property card on the page and extract information like its address, price, and other details.

If we inspect the DOM, we will find that each property card is an <article> element whose ancestor is a div with class .photo-cards_extra-attribution. This makes our job easier because we can use querySelectorAll to gather all the articles and use the appropriate selectors to retrieve the desired info for each card. We’ll also encapsulate this functionality into its own function, getZillowPropertiesInfo.
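A possible shape for getZillowPropertiesInfo is sketched below. The card selector comes from the description above, while the per-field selectors in FIELD_SELECTORS are hypothetical and should be replaced after inspecting a real card. The sketch uses page.$$eval, which runs querySelectorAll in the page and hands the matched elements to the callback.

```javascript
// From the text: every card is an <article> under this container class.
const CARD_SELECTOR = '.photo-cards_extra-attribution article';

// Hypothetical per-field selectors: confirm them in the inspector.
const FIELD_SELECTORS = {
  address: 'address',
  price: '[data-test="property-card-price"]',
  link: 'a',
};

const getZillowPropertiesInfo = (page) =>
  // The callback runs inside the browser, so it cannot see outer variables;
  // the selectors are passed in as a serializable argument instead.
  page.$$eval(
    CARD_SELECTOR,
    (articles, fields) =>
      articles
        .map((article) => {
          const address = article.querySelector(fields.address);
          const price = article.querySelector(fields.price);
          const link = article.querySelector(fields.link);
          // Cards that have not been populated yet lack these elements.
          if (!address || !price) return null;
          return {
            address: address.textContent.trim(),
            price: price.textContent.trim(),
            url: link ? link.href : null,
          };
        })
        .filter(Boolean), // drop the empty cards
    FIELD_SELECTORS
  );
```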

Before we wrap up this article, look at the filter invocation. Zillow’s browser-based UI is built as a SPA (Single Page Application), and the corresponding cards are fetched dynamically when they come into view. To prevent null access issues, we filter out all the empty cards. If you want to get the results from all the cards presented on the page, you will need to scroll the entire view height to trigger the corresponding fetch requests before calling getZillowPropertiesInfo. There are many solutions on the web that show how to do so with Puppeteer.
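One simple way to trigger those lazy fetches is to scroll in small steps until the view stops moving, as in the sketch below. It is not part of the original script, the scrollToBottom name is our own, and it assumes that the document itself is the scrolling element. If Zillow renders the results inside an inner scrollable container, that container would have to be scrolled instead.

```javascript
// Scrolls the page down step by step so that lazily loaded cards are fetched.
const scrollToBottom = (page, step = 400, pauseMs = 200) =>
  page.evaluate(
    async (stepPx, waitMs) => {
      // Assumption: the document is what scrolls, not an inner container.
      const root = document.scrollingElement;
      let lastTop = -1;
      // Stop once a scroll attempt no longer changes the position.
      while (root.scrollTop !== lastTop) {
        lastTop = root.scrollTop;
        root.scrollBy(0, stepPx);
        // Pause so the page can fetch and render the newly visible cards.
        await new Promise((resolve) => setTimeout(resolve, waitMs));
      }
    },
    step,
    pauseMs
  );
```

Calling scrollToBottom(page) right before getZillowPropertiesInfo(page) should leave fewer empty cards to filter out, at the cost of a longer run.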

Executing the script

Here is the assembled script.

Running it will result in an output similar to the following:

Note: Sometimes, you might get an empty array as output from the script, which is expected; even though we took all the necessary precautions to bypass the bot prevention mechanisms, there are still cases in which the CAPTCHA verification will fail. In such cases, try rerunning the script after a while or change the search terms when invoking getZillowProperties.

Epilogue

In this article, we saw how we could use an automation platform like Browserless with Node.js to gather valuable data about properties listed on the Zillow website. We also dedicated a section to explaining how to deal with simple CAPTCHA verification challenges. We hope you learned something useful today. Be sure to follow us on social media for more educational content.
